Data-driven testing is an approach where test inputs and expected results are stored externally, separate from the test scripts themselves. Instead of hardcoding values, our tests read this data and use it to drive their behavior. This method allows us to run the same test multiple times with different data sets, significantly expanding our test coverage without duplicating code.

But wait, why should I care?

Imagine you’re testing a finance application with various scenarios based on different countries, currencies and transaction types. Creating separate tests for each combination would be time-consuming and hard to maintain. It might work well initially, but as our application grows and the number of scenarios increases, we start to feel the limitations of this approach. This is where data-driven testing shines.

What We’re Trying to Achieve

In this guide, we’ll explore how to implement data-driven tests using Playwright, an open source automation library for end-to-end testing of modern web apps. We’ll start with a simple, hardcoded test and gradually evolve it into a flexible, data-driven solution. Along the way, we’ll discuss different approaches, from basic JSON variables to dynamic data sources, helping you choose the right method for your project.

Overview of Our Use Case

Our application is a salary insights tool, designed to provide real-time global market rates for various roles across different countries. This tool is crucial for making fair and competitive international offers when hiring or comparing average compensation.

The interface consists of two main dropdown menus:

- Role selection: Users can choose from a variety of job roles such as accountant, account executive, account manager, etc.

- Country selection: Users can select from a long list of countries, each represented by its flag and name.

The challenge in testing this application lies in the following aspects:

- Data variety: There are numerous combinations of roles and countries, each potentially yielding different salary insights.

- Data validity: Some roles might not be available in certain countries or salary data might not be complete for all combinations.

- Data accuracy: It’s critical to ensure that the salary insights provided are accurate for each valid role-country combination.

- Maintenance: As new roles or countries are added, or as salary data is updated, our tests need to adapt without requiring extensive code changes.

Using a data-driven approach for testing this application is ideal because:

- It allows us to test multiple combinations of roles and countries without duplicating test code.

- We can easily update or expand our test data as new roles or countries are added to the system.

- It helps in managing the complexity of validating different data scenarios efficiently.

- It makes our tests more maintainable and less prone to errors that might occur with hardcoded values.

Setting Up Our Playwright Test

Prerequisite: Make sure npm is installed on your machine.

To set up a Playwright test project, follow these steps:

- Initialize a new Playwright project:

npm init playwright@latest

This command will guide you through the setup process, allowing you to choose between TypeScript and JavaScript, name your tests folder and set up GitHub Actions for CI.

playwright.config.ts

package.json

tests/

example.spec.ts

Our Initial Test (Not Data-Driven)

Now, let’s create our initial test for the salary insights tool. This test will be a simple, non-data-driven version that checks the functionality for a single role and country combination.

Create a new file in your tests folder called salary-insights.spec.ts:

import { test, expect } from '@playwright/test';

test('Salary Insights Test - Simple', async ({ page }) => {

// Navigate to the Salary Insights page

await page.goto("/dev/salary-insights");

await page.waitForLoadState("load");

const role = "QA Engineer";

const country = "Canada";

// Select Role

await page.locator('role-dropdown-selector').click();

await page.fill('role-input-selector', role);

await page.click(`text=${role}`);

// Select Country

await page.click('country-dropdown-selector');

await page.fill('country-input-selector', country);

await page.click(`text=${country}`);

// Click Search

await page.click('search-button-selector');

// Assertions

await expect(page.locator('filter-container').getByText(role)).toBeVisible();

await expect(page.locator('filter-container').getByText(country)).toBeVisible();

const compensationDetails = await page.locator('compensation-details-selector').innerText();

expect(compensationDetails).toContain(role);

expect(compensationDetails).toContain(country);

const compensationInfo = await page.locator('compensation-info-selector').innerText();

expect(compensationInfo).toContain(country);

});

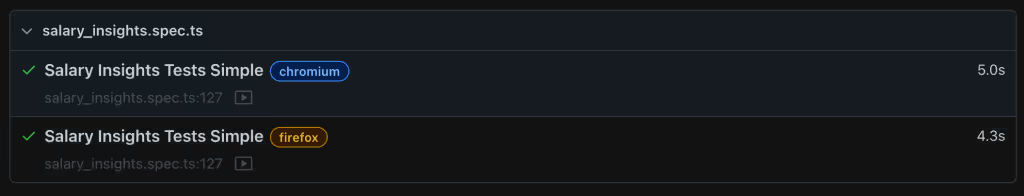

This test does the following:

- Navigates to the salary insights page

- Waits for the page and salary data to load

- Selects a specific role (QA Engineer)and country (Canada)

- Clicks the search button

- Verifies that the selected role and country are visible in the results

- Checks that the compensation details and info contain the correct role and country

To run your playwright test, use the command:

npx playwright test

Results:

This initial test is a good starting point, but it’s limited to testing only one combination of role and country. In the next sections, we’ll explore how to transform this into a data-driven test to cover multiple scenarios efficiently.

Adapting for Data-Driven Tests — The Plan and Mindset

The key idea behind data-driven testing is to separate the test logic from the test data. This separation allows us to:

- Reuse the same test logic for multiple scenarios

- Easily add or modify test cases without changing the test code

- Improve test maintainability and readability

When adapting to data-driven tests, we should:

- Identify the variables in our test (in this case, role and country)

- Extract these variables into a separate data structure

- Modify our test to iterate over this data structure

Let’s implement this approach using a simple, albeit naive, solution with JSON variables.

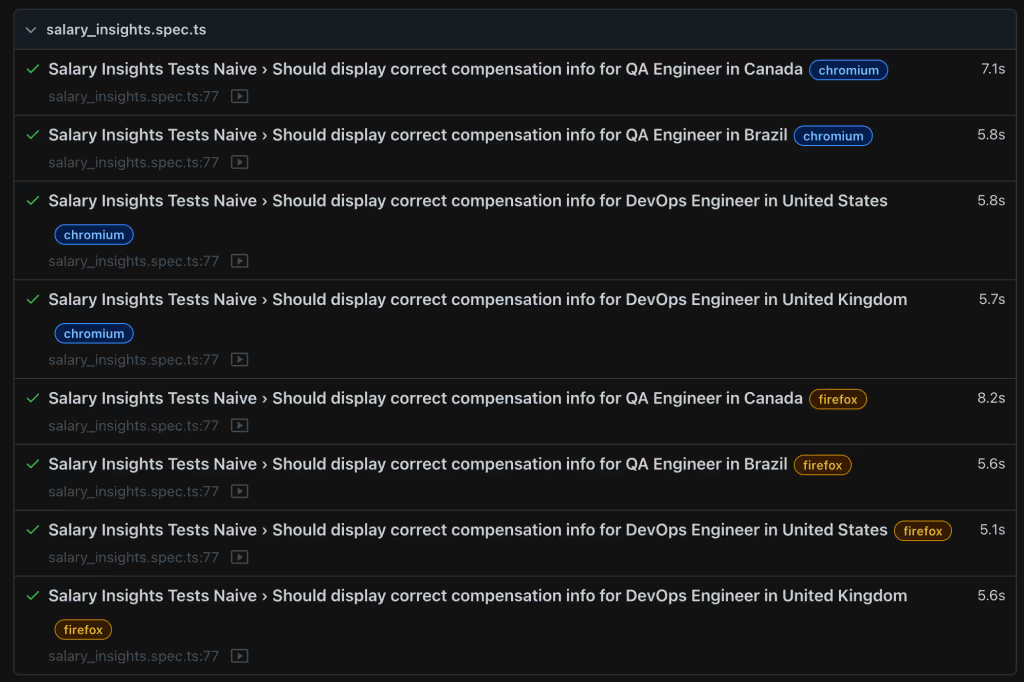

Naive Approach Using JSON Variables

This approach uses a JSON array to store our test data and iterates over it to create multiple test cases. Here’s how we can implement it:

// Declare a JSON array of test data

const testData = [

{ role: "QA Engineer", country: "Canada" },

{ role: "QA Engineer", country: "Brazil" },

{ role: "DevOps Engineer", country: "United States" },

{ role: "DevOps Engineer", country: "United Kingdom" },

];

test.describe("Salary Insights Tests Naive", () => {

testData.forEach(({ role, country }) => {

test(`Should display correct compensation info for ${role} in ${country}`, async ({ page }) => {

await page.goto("/dev/salary-insights");

await page.waitForLoadState("load");

// Select Role

await page.locator('role-dropdown-selector').click();

await page.fill('role-input-selector', role);

await page.click(`text=${role}`);

// ... (rest of your test logic)

});

});

});

This approach offers several benefits:

- We can easily add more test cases to the testData array.

- The test logic remains the same for all scenarios, reducing code duplication.

- Test cases are more readable, as each combination of role and country creates a separate test.

However, this approach has limitations:

- The test data is still within the test file, which can become unwieldy for larger datasets.

- It doesn’t separate the test data from the test code entirely, making it harder to manage test data independently.

- It may not be suitable for very large data sets or when test data needs to be managed by non-technical team members.

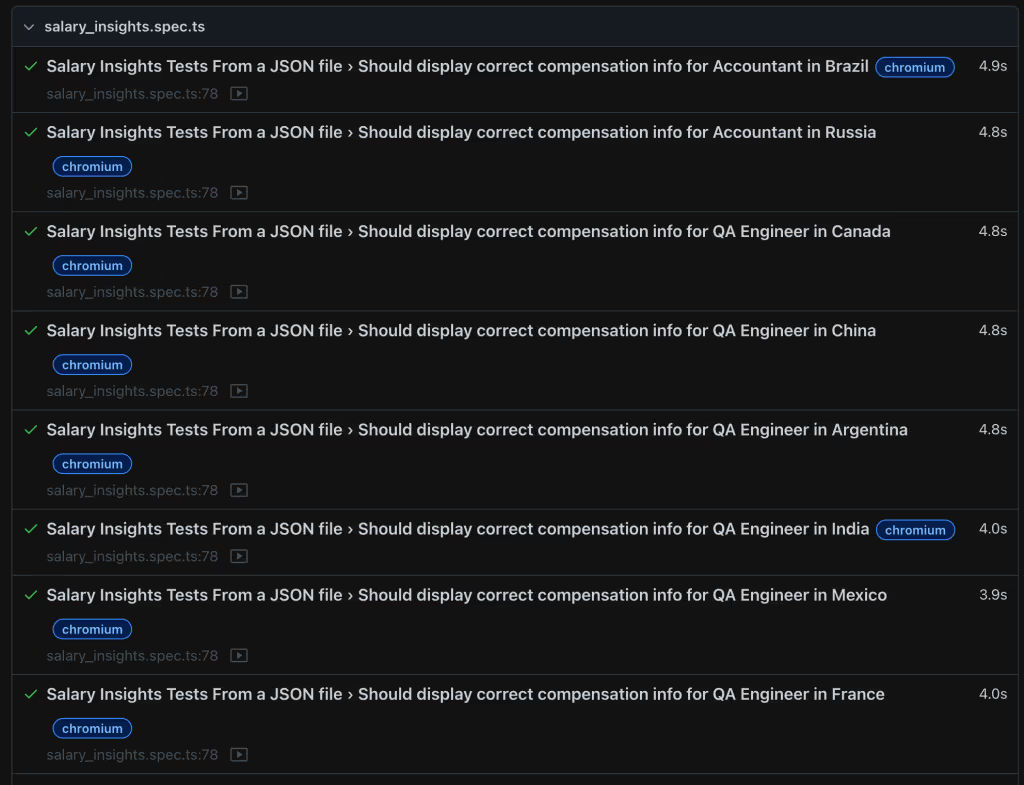

A Somewhat Cleaner Approach — Using a JSON File

This approach involves storing test data in an external JSON file and reading it into our test script. Here’s how we can implement it:

- Create a JSON file for test data:

Create a file named salary_insights.json in a data directory within your test folder:

[

{ "role": "QA Engineer", "country": "Canada" },

{ "role": "QA Engineer", "country": "Brazil" },

{ "role": "DevOps Engineer", "country": "United States" },

{ "role": "DevOps Engineer", "country": "United Kingdom" }

]

- Modify the test script to read from the JSON file:

import { test, expect } from '@playwright/test';

import * as fs from 'fs';

import * as path from 'path';

// Define the type for the test data

interface TestData {

role: string;

country: string;

}

// Load test data from JSON file

const testDataPath = path.resolve(__dirname, "data", "salary_insights.json");

let salaryTestData: TestData[];

try {

const rawData = fs.readFileSync(testDataPath, "utf8");

salaryTestData = JSON.parse(rawData) as TestData[];

} catch (error) {

console.error("Error reading or parsing salary_insights.json:", error);

process.exit(1); // Exit the process with an error code

}

test.describe("Salary Insights Tests From a JSON file", () => {

salaryTestData.forEach(({ role, country }) => {

test(`Should display correct compensation info for ${role} in ${country}`, async ({ page }) => {

await page.goto("/dev/salary-insights");

await page.waitForLoadState("load");

// Select Role

await page.locator('role-dropdown-selector').click();

await page.fill('role-input-selector', role);

await page.click(`text=${role}`);

// ... (rest of your test logic)

});

});

});

For my test result, I added some more data to my JSON for variety.

Using a JSON file for data-driven tests offers significant advantages. It separates test data from logic, enhancing maintainability and scalability. The approach improves readability, allows non-technical team members to manage test cases and enables data reuse across different test types.

This method also paves the way for dynamic data-driven testing. You can fetch data from remote sources, implement real-time updates, use environment-specific data, automate data generation and version control your test data independently. These capabilities make your testing process more flexible and responsive to changing requirements.

Dynamic Data-Driven Tests

Dynamic data-driven testing is crucial in today’s fast-paced development environments. It allows you to quickly adapt your tests to changing business rules, new features or data updates without modifying your test code. This approach is particularly valuable in CI/CD pipelines, where test failures can block deployments and frustrate developers.

Consider this scenario: Your company frequently updates its salary benchmarks based on market trends. Traditional hardcoded tests would require constant updates, risking pipeline failures and delays. Dynamic data-driven tests solve this by fetching the latest data at runtime.

Implementing Dynamic Data Sources

While options like S3, GitHub or public file services are viable, using an API offers superior flexibility and control. Let’s demonstrate using a Postman mock collection, which simulates an API for managing test data.

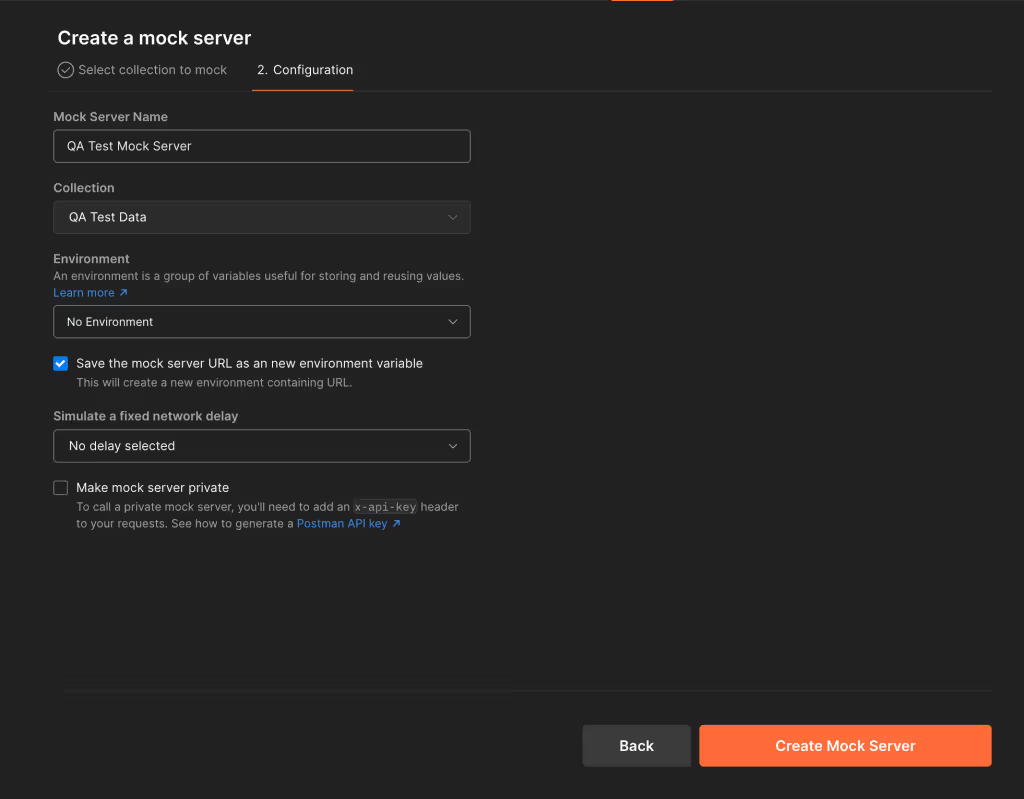

Creating a Postman Mock Collection for Dynamic Test Data

Using a mock collection in Postman provides a flexible, manageable way to serve test data. Here’s a quick guide to get you started:

- Set up a new collection in Postman named “QA Test Data.”

- Create a GET request with the endpoint

/test-data/salary-insights-data. - In the request’s “Examples” tab, add your test data in JSON format.

- Create a mock server for your collection.

- Save your mock server URL as an environment variable for easy access.

For an in-depth guide on creating mock servers in Postman, check out the official documentation.

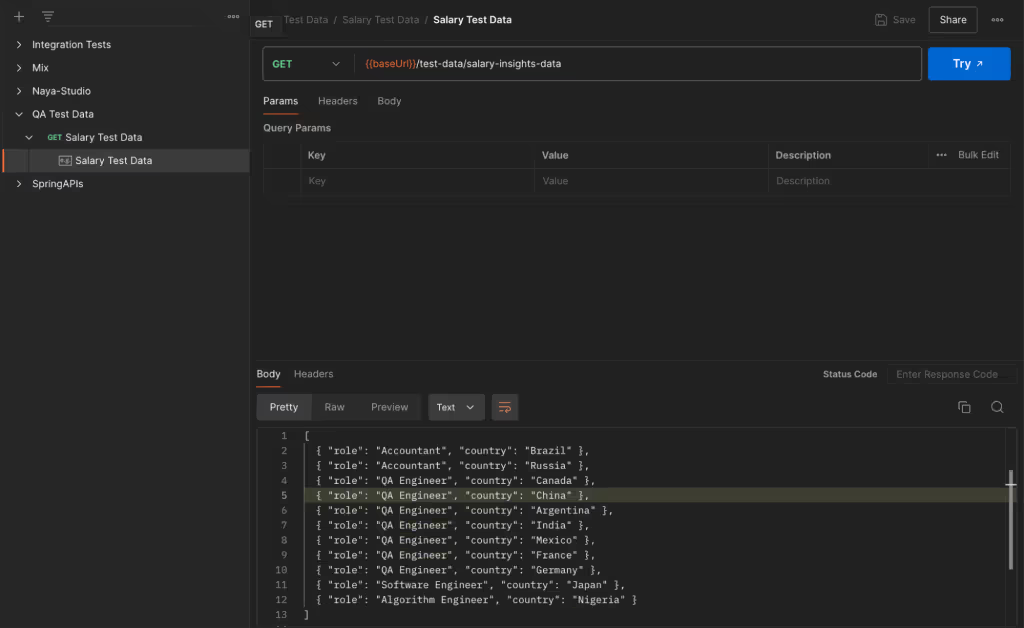

Once set up, your mock server will serve the test data you’ve defined, as shown in figure 2 above. This data can now be dynamically fetched and used in your Playwright tests, allowing for flexible, environment-specific testing scenarios.

By using this method, you can easily update test data without modifying your test code, making your data-driven tests more maintainable and adaptable to changing requirements.

Update Your Playwright Test:

import { test, expect } from '@playwright/test';

import axios from 'axios';

interface TestData {

role: string;

country: string;

}

async function fetchTestData(): Promise<TestData[]> {

const baseUrl = 'https://8e461e41-9dbd-412d-b57b-1b18cd66eb8e.mock.pstmn.io';

const response = await axios.get(`${baseUrl}/test-data/salary-insights-data`);

return response.data;

}

test.describe('Dynamic Salary Insights Tests', () => {

let testData: TestData[];

test.beforeAll(async () => {

testData = await fetchTestData();

});

for (const { role, country } of testData) {

test(`Should display correct compensation info for ${role} in ${country}`, async ({ page }) => {

await page.goto("/dev/salary-insights");

await page.waitForLoadState("load");

// Select Role

await page.locator('role-dropdown-selector').click();

await page.fill('role-input-selector', role);

await page.click(`text=${role}`);

// ... (rest of your test logic)

});

}

});

This approach offers several benefits:

- Real-time updates: Tests always use the latest data.

- Collaboration: Team members can update test data via Postman, without touching code.

- Version control: Track data changes over time in Postman.

- Environment management: Easily switch between data sets for different environments.

Best Practices for Managing Test Data Across Environments

When implementing data-driven tests, managing test data across different environments like development, staging and QA is crucial. This approach ensures that your tests are consistent and relevant across your entire development pipeline. Let’s explore how to achieve this using environment variables and API endpoints.

Environment-Specific Test Data

To handle different environments, we can use environment variables to dynamically select the appropriate API endpoint for our test data. Here’s how you can implement this in your Playwright tests:

import { test, expect } from '@playwright/test';

import axios from 'axios';

interface TestData {

role: string;

country: string;

}

const API_BASE_URL = process.env.TEST_DATA_API_URL || 'https://default-api-url.com';

async function fetchTestData(environment: string): Promise<TestData[]> {

const response = await axios.get(`${API_BASE_URL}/${environment}/test-data/salary-insights-data`);

return response.data;

}

test.describe('Salary Insights Tests Across Environments', () => {

let testData: TestData[];

const environment = process.env.TEST_ENVIRONMENT || 'development';

test.beforeAll(async () => {

testData = await fetchTestData(environment);

});

for (const { role, country } of testData) {

test(`Should display correct compensation info for ${role} in ${country} (${environment})`, async ({ page }) => {

await page.goto("/dev/salary-insights");

await page.waitForLoadState("load");

// Select Role

await page.locator('role-dropdown-selector').click();

await page.fill('role-input-selector', role);

await page.click(`text=${role}`);

// ... (rest of your test logic)

});

}

});

In this setup, you can easily switch between environments by setting the TEST_ENVIRONMENT variable. This flexibility is particularly useful in CI/CD pipelines where you might run tests against different environments sequentially.

Using GitHub Actions

If you’re using GitHub Actions for your CI/CD pipeline, you can use environment secrets and variables to manage your API URLs and test environments securely. Here’s an example workflow:

name: Run Tests

on: [push]

jobs:

test:

runs-on: ubuntu-latest

strategy:

matrix:

environment: [development, qa, staging]

steps:

- uses: actions/checkout@v3

- name: Setup Node.js

uses: actions/setup-node@v3

with:

node-version: '18'

- name: Install dependencies

run: npm ci

- name: Run Playwright tests

env:

TEST_DATA_API_URL: ${{ secrets.TEST_DATA_API_URL }}

TEST_ENVIRONMENT: ${{ matrix.environment }}

run: |

if [[ "${{ github.ref }}" == "refs/heads/main" && "${{ matrix.environment }}" != "staging" ]]; then

echo "Skipping non-staging environments for main branch"

exit 0

fi

npx playwright test

This workflow does the following:

- Uses a matrix strategy to run tests against multiple environments (development, QA, staging).

- Sets up Node.js and installs dependencies.

- Uses the

TEST_DATA_API_URLsecret for the API base URL. - Sets the

TEST_ENVIRONMENTbased on the matrix environment. - Adds a conditional check to only run staging tests for the main branch, simulating a more realistic scenario where you might want to run comprehensive tests before merging to main.

This setup is more flexible and aligns better with the environment-specific test data approach we discussed earlier. It allows you to run tests against different environments in parallel, improving your CI/CD pipeline efficiency while maintaining the ability to use environment-specific test data.

By adopting these practices, you’re not just improving your current tests, you’re setting the stage for more sophisticated, adaptable testing strategies that can evolve with your application. Remember, the goal is to create a testing framework that’s as dynamic and responsive as your development process itself.

Data-driven organizations can outperform their competitors by 6% in profitability and 5% in productivity. What does it mean to be data-driven? And how can you navigate data as a leader? Check out our guide, “Navigating Data-Driven Leadership.”

About the author: Ememobong Akpanekpo

Ememobong Akpanekpo is a senior software engineer and technical leader at Andela, a private global talent marketplace. He drives innovation in cloud and mobile technologies to create products that delight users and deliver business value. With more than seven years of experience spanning Android, iOS and cloud platforms, Ememobong has led teams to build scalable, user-centric solutions that make a real impact.

.avif)

.png)